Friday, 26 January 2018

Tuesday, 23 January 2018

Supported KMS Servers with vSAN 6.6

To use the vSAN Encryption feature, a KMS (Key Management Server) is needed, so you should know which KMS servers are supported by vSAN 6.6. To find out, always check the VMware Compatibility Guide. The link for the Compatibility Guide is <<<<<here>>>>>

Remember: to get the latest information, always refer to this Compatibility Guide.

vSAN Ready Nodes - AF Series and HY Series

vSAN Ready Nodes provide the most flexible hardware options for building Hyper-Converged Infrastructure based on VMware Hyper-Converged Infrastructure software.

AF = All Flash

HY = Hybrid

Download the vSAN Ready Nodes wallpaper from here:

https://www.vmware.com/resources/compatibility/pdf/vi_vsan_rn_guide.pdf

Get vSAN Ready Nodes information from here:

https://vsanreadynode.vmware.com/RN/RN

Sunday, 21 January 2018

Vembu BDR Suite v3.9.0 is now Generally Available and includes Tape Support & Flexible Restores

It is highly important that data is backed up and that there is an effective Disaster Recovery plan in case of a data threat or a catastrophe. While data continues to grow and a number of technology providers offer better and more comprehensive storage techniques to businesses, there is still no alternative to the concept of backup. Costs are a major factor for businesses, but a steady backup plan that counters data threats and complies with strict regulatory standards (including the upcoming EU GDPR) is necessary. Be it virtual environment backup, such as VMware Backup and Microsoft Hyper-V Backup, or legacy environment backup, such as Windows Server Backup and Workstation Backup, Vembu BDR Suite has been offering backup and recovery with its own file system, VembuHIVE, thereby easing the backup process and storage management at an extremely affordable price.

Last week, Vembu announced the release of Vembu BDR Suite v3.9.0, which offers many features and enhancements to meet the different needs of diverse IT environments. According to Vembu, the overall goal of v3.9.0 is to provide advancements in storage, security, and data restoration.

The Vembu BDR Suite v3.9.0 release stands out because it incorporates a number of critical features for maintaining business continuity and high availability. Here are some of the key highlights of this release:

Tape Backup Support

Vembu now supports the popular 3-2-1 backup strategy (3 copies of the backup, on 2 different media, disk and tape, with 1 copy offsite) by announcing native tape backup support for image-based backups (VMware, Hyper-V, and physical Windows servers and workstations), providing an option for long-term archival and offsite storage. Vembu Tape Backup Support also makes DR possible on any physical or virtual environment. Vembu tape backup is thus designed with the future needs of ever-evolving IT demands in mind.
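To make the 3-2-1 rule concrete, here is a minimal Python sketch that checks a list of backup copies against it: at least three copies, on at least two kinds of media, with at least one copy offsite. The `BackupCopy` type and `satisfies_3_2_1` function are hypothetical names for illustration; they are not part of Vembu BDR Suite.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class BackupCopy:
    """One copy of the protected data (hypothetical model for illustration)."""
    name: str
    media: str     # e.g. "disk" or "tape"
    offsite: bool  # True if the copy is stored away from the primary site


def satisfies_3_2_1(copies: List[BackupCopy]) -> bool:
    """3 copies, on at least 2 different media, with at least 1 copy offsite."""
    enough_copies = len(copies) >= 3
    enough_media = len({c.media for c in copies}) >= 2
    has_offsite = any(c.offsite for c in copies)
    return enough_copies and enough_media and has_offsite


if __name__ == "__main__":
    plan = [
        BackupCopy("primary backup", "disk", offsite=False),
        BackupCopy("local archive", "tape", offsite=False),
        BackupCopy("vaulted tape", "tape", offsite=True),
    ]
    print(satisfies_3_2_1(plan))      # True
    print(satisfies_3_2_1(plan[:2]))  # False: two copies, none offsite
```

Counting media types with a set means two tape copies still count as one medium, which is the point of the "2" in the rule.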

Quick VM Recovery on ESXi host for Hyper-V and Windows Image Backups

When we speak of data backup, recovery of data is equally important, if not more so. The time taken to restore data determines the business continuity of any organization. In previous versions, Vembu provided instant recovery from the GUI only for VMware backups. From v3.9.0, Vembu makes instant recovery simpler and quicker than before by enabling Quick VM Recovery on VMware ESXi from the Vembu BDR backup server console for all image-based backups (VMware, Hyper-V, and Microsoft Windows). Vembu thus lowers organizations' Recovery Time Objectives and provides quicker access to recovered data.

Backup-level Encryption

With the newest release, Vembu provides the ability to encrypt data while creating a backup job. Each backup job configured from the distributed agents or through the Vembu BDR backup server can now be secured with backup-level encryption. Using custom passwords, users can enable additional security for their backup jobs, and the backup data can be restored or accessed only by providing the password. The data is thus encrypted and accessible only to authorized users. This step helps counter data threats and supports data compliance.
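Vembu does not describe its encryption scheme, so the following is only a generic, hypothetical Python sketch of password-gated restore: a key is derived from the job password with PBKDF2 (standard library `hashlib.pbkdf2_hmac`), only a salt and a verifier are stored, and a restore succeeds only when the same password is supplied. The function names and the iteration count are assumptions. The sketch stops at key derivation; actually encrypting the backup data would be done with an authenticated cipher such as AES-GCM from a dedicated cryptography library, which is omitted here.

```python
import hashlib
import hmac
import os
from typing import Optional

ITERATIONS = 200_000  # assumed PBKDF2 work factor; tune for your hardware


def derive_key(password: str, salt: bytes) -> bytes:
    """Derive a 32-byte key from the backup password with PBKDF2-HMAC-SHA256."""
    return hashlib.pbkdf2_hmac("sha256", password.encode("utf-8"), salt, ITERATIONS)


def _verifier(key: bytes) -> bytes:
    # One-way value derived from the key, stored so a password can be checked later.
    return hashlib.sha256(b"password-check" + key).digest()


def create_job_secret(password: str) -> dict:
    """At job configuration time: store a random salt and a verifier, never the password."""
    salt = os.urandom(16)
    return {"salt": salt, "verifier": _verifier(derive_key(password, salt))}


def unlock_for_restore(password: str, job_secret: dict) -> Optional[bytes]:
    """At restore time: return the data key only if the password is correct."""
    key = derive_key(password, job_secret["salt"])
    # compare_digest avoids leaking how many bytes matched through timing.
    if hmac.compare_digest(_verifier(key), job_secret["verifier"]):
        return key  # would be handed to an authenticated cipher such as AES-GCM
    return None


if __name__ == "__main__":
    secret = create_job_secret("S3cure-backup-pass")
    print(unlock_for_restore("S3cure-backup-pass", secret) is not None)  # True
    print(unlock_for_restore("wrong password", secret) is None)          # True
```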

Auto Authorization at Vembu OffsiteDR Server

Offsite data protection is critical for business continuity and is primarily done to keep a backup instance of key business data. To increase data security, Vembu BDR Suite v3.9.0 adds an Auto Authorization feature to the Vembu OffsiteDR server that lets only registered BDR backup servers connect to it. Vembu BDR servers are authorized through a unique registration key generated at the OffsiteDR server. Auto Authorization at the OffsiteDR server thus safeguards your backup data even when it is transferred offsite.
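Vembu does not publish how its registration key is generated or checked, so this is only a hypothetical Python sketch of the general idea: the offsite server issues a random key per backup server, keeps only a hash of it, and rejects connections from unregistered servers or with the wrong key. `OffsiteRegistry`, `register`, and `authorize` are illustrative names, not Vembu APIs.

```python
import hashlib
import hmac
import secrets
from typing import Dict


class OffsiteRegistry:
    """Hypothetical offsite server that only accepts registered backup servers."""

    def __init__(self) -> None:
        # Maps backup-server ID -> SHA-256 hash of its registration key.
        self._registered: Dict[str, bytes] = {}

    def register(self, server_id: str) -> str:
        """Generate a unique registration key for a backup server and remember its hash."""
        key = secrets.token_urlsafe(32)
        self._registered[server_id] = hashlib.sha256(key.encode()).digest()
        return key  # shown to the administrator once, then entered on the backup server

    def authorize(self, server_id: str, presented_key: str) -> bool:
        """Accept a connection only if the server is registered and the key matches."""
        expected = self._registered.get(server_id)
        if expected is None:
            return False  # unknown backup server
        presented = hashlib.sha256(presented_key.encode()).digest()
        return hmac.compare_digest(presented, expected)


if __name__ == "__main__":
    registry = OffsiteRegistry()
    key = registry.register("bdr-01")
    print(registry.authorize("bdr-01", key))      # True
    print(registry.authorize("bdr-02", key))      # False: not registered
    print(registry.authorize("bdr-01", "guess"))  # False: wrong key
```

Storing only the hash means a leaked registry file does not directly reveal usable keys.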

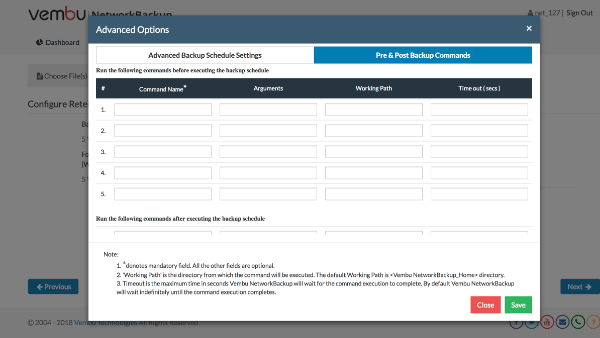

Many businesses need to execute certain business logic before or after a backup job. Running this logic manually through scripts is difficult and is not feasible for organizations with many backup jobs running in their IT infrastructure. To simplify this, Vembu BDR Suite v3.9.0 provides a separate wizard in the NetworkBackup, OnlineBackup, and ImageBackup clients, where one can add a number of pre- and post-backup executable commands/scripts. The added commands/scripts then run automatically at specific stages based on the configuration, making it possible to run custom actions before or after scheduled backups.
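Conceptually, pre/post commands wrap a backup job with two ordered command lists. The Python sketch below uses a hypothetical `run_backup_job` helper (not a Vembu interface): pre-backup commands run first and abort the job if one fails, and post-backup commands run in a `finally` block so that cleanup steps, such as restarting a quiesced service, still run when the backup itself fails.

```python
import subprocess
import sys
from typing import Callable, Sequence


def run_commands(commands: Sequence[Sequence[str]], stage: str) -> None:
    """Run each command in order; raise if any command exits with a non-zero status."""
    for cmd in commands:
        result = subprocess.run(cmd, capture_output=True, text=True)
        if result.returncode != 0:
            raise RuntimeError(f"{stage} command {list(cmd)!r} failed: {result.stderr.strip()}")


def run_backup_job(
    pre: Sequence[Sequence[str]],
    backup: Callable[[], None],
    post: Sequence[Sequence[str]],
) -> None:
    """Hypothetical job runner: pre-commands, then the backup, then post-commands."""
    run_commands(pre, "pre-backup")   # a failure here skips the backup entirely
    try:
        backup()
    finally:
        run_commands(post, "post-backup")  # runs whether or not the backup succeeded


if __name__ == "__main__":
    run_backup_job(
        pre=[[sys.executable, "-c", "print('flush application caches')"]],
        backup=lambda: print("backing up..."),
        post=[[sys.executable, "-c", "print('resume application')"]],
    )
```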

Besides the features listed above, Vembu BDR Suite v3.9.0 includes a few other interesting features, such as Windows Event Viewer integration, along with some enhancements.

Interested in trying Vembu BDR Suite? Try it now with a 30-day free trial.

Saturday, 20 January 2018

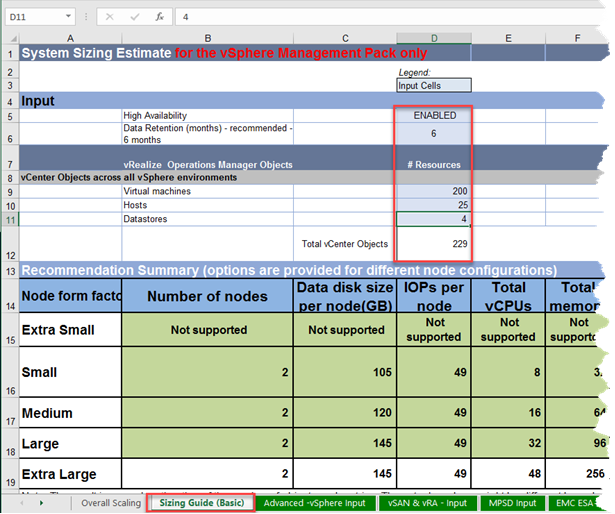

vROPS 6.6 Sizing Guidelines Worksheet

Access KB 2150421 from <<<<<here>>>>>. Scroll down to the end of the KB article, where you will find the link for downloading the worksheet in the attachments category.

vRealize Operations Manager 6.6 and 6.6.1 Sizing Guidelines (2150421)

By default, VMware offers Extra Small, Small, Medium, Large, and Extra Large configurations during installation. You can size the environment according to the existing infrastructure to be monitored. After the vRealize Operations Manager instance outgrows the existing size, you must expand the cluster to add nodes of the same size.

| Characteristics / Node Size | Extra Small | Small | Medium | Large | Extra Large | Standard Size Remote Collectors | Large Size Remote Collectors |
|---|---|---|---|---|---|---|---|
| vCPU | 2 | 4 | 8 | 16 | 24 | 2 | 4 |
| Memory (GB) | 8 | 16 | 32 | 48 | 128 | 4 | 16 |
| Maximum Memory Configuration (GB) | N/A | 32 | 64 | 96 | N/A | 8 | 32 |
| Datastore latency | Consistently lower than 10 ms with possible occasional peaks up to 15 ms | | | | | | |
| Network latency for data nodes | < 5 ms | | | | | | |
| Network latency for remote collectors | < 200 ms | | | | | | |
| Network latency between agents and vRealize Operations Manager nodes and remote collectors | < 20 ms | | | | | | |
| vCPU: Physical core ratio for data nodes (*) | 1 vCPU to 1 physical core at scale maximums | | | | | | |
| IOPS | See the attached Sizing Guidelines worksheet for details. | | | | | | |
| Disk Space | | | | | | | |
| Single-Node Maximum Objects | 250 | 2,400 | 8,500 | 15,000 | 35,000 | 1,500 (****) | 15,000 (****) |
| Single-Node Maximum Collected Metrics (**) | 70,000 | 800,000 | 2,500,000 | 4,000,000 | 10,000,000 | 600,000 | 4,375,000 |
| Multi-Node Maximum Objects Per Node (***) | N/A | 2,000 | 6,250 | 12,500 | 30,000 | N/A | N/A |
| Multi-Node Maximum Collected Metrics Per Node (***) | N/A | 700,000 | 1,875,000 | 3,000,000 | 7,500,000 | N/A | N/A |
| Maximum number of nodes in a cluster | 1 | 2 | 16 | 16 | 6 | 50 | 50 |
| Maximum number of End Point Operations Management agents per node | 100 | 300 | 1,200 | 2,500 | 2,500 | 250 | 2,000 |
| Maximum Objects for the configuration with the maximum supported number of nodes (***) | 250 | 4,000 | 75,000 | 150,000 | 180,000 | N/A | N/A |
| Maximum Metrics for the configuration with the maximum supported number of nodes (***) | 70,000 | 1,400,000 | 19,000,000 | 37,500,000 | 45,000,000 | N/A | N/A |

(*) It is critical to allocate enough CPU resources for environments running at scale maximums to avoid performance degradation. Refer to the vRealize Operations Manager Cluster Node Best Practices in the vRealize Operations Manager 6.6 Help for more guidelines regarding CPU allocation.

(**) Metric numbers reflect the total number of metrics that are collected from all adapter instances in vRealize Operations Manager. To get this number, you can go to the Cluster Management page in vRealize Operations Manager, and view the adapter instances of each node at the bottom of the page. You can get the number of metrics collected by each adapter instance. The sum of these metrics is what is estimated in this sheet. Note: The number shown in the overall metrics on the Cluster Management page reflects the metrics that are collected from different data sources and the metrics that vRealize Operations Manager creates.

(***) In large, 16-node configurations, note the reduction in maximum metrics to permit some head room. This adjustment is accounted for in the calculations.

(****) The object limit for the remote collector is based on the VMware vCenter adapter.
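As a rough planning aid, the data-node columns of the table can be turned into a small calculator. This Python sketch (hypothetical `suggest_cluster` helper) walks the node sizes from smallest to largest and returns the first size and node count whose single-node or multi-node limits cover the requested objects and metrics, while also respecting the maximum node count and the cluster-wide maximums from the last two rows. It ignores HA, remote collectors, IOPS, and disk space, so treat its answer as a starting point for the official worksheet, not a replacement.

```python
import math
from typing import NamedTuple, Optional, Tuple


class NodeSize(NamedTuple):
    name: str
    single_objects: int              # Single-Node Maximum Objects
    single_metrics: int              # Single-Node Maximum Collected Metrics
    per_node_objects: Optional[int]  # Multi-Node Maximum Objects Per Node (None = N/A)
    per_node_metrics: Optional[int]  # Multi-Node Maximum Collected Metrics Per Node
    max_nodes: int                   # Maximum number of nodes in a cluster
    cluster_objects: int             # Maximum Objects at the maximum node count
    cluster_metrics: int             # Maximum Metrics at the maximum node count


# Data-node columns copied from the vRealize Operations Manager 6.6 sizing table.
SIZES = [
    NodeSize("Extra Small", 250, 70_000, None, None, 1, 250, 70_000),
    NodeSize("Small", 2_400, 800_000, 2_000, 700_000, 2, 4_000, 1_400_000),
    NodeSize("Medium", 8_500, 2_500_000, 6_250, 1_875_000, 16, 75_000, 19_000_000),
    NodeSize("Large", 15_000, 4_000_000, 12_500, 3_000_000, 16, 150_000, 37_500_000),
    NodeSize("Extra Large", 35_000, 10_000_000, 30_000, 7_500_000, 6, 180_000, 45_000_000),
]


def suggest_cluster(objects: int, metrics: int) -> Optional[Tuple[str, int]]:
    """Return (node size, node count) for the smallest size that fits, or None."""
    for size in SIZES:
        # One node: the higher single-node maximums apply.
        if objects <= size.single_objects and metrics <= size.single_metrics:
            return size.name, 1
        if size.per_node_objects is None or size.per_node_metrics is None:
            continue  # this size is not meant to be scaled out
        # Several nodes: the lower per-node maximums apply to every node.
        nodes = max(
            2,
            math.ceil(objects / size.per_node_objects),
            math.ceil(metrics / size.per_node_metrics),
        )
        if (
            nodes <= size.max_nodes
            and objects <= size.cluster_objects
            and metrics <= size.cluster_metrics
        ):
            return size.name, nodes
    return None


if __name__ == "__main__":
    print(suggest_cluster(20_000, 2_000_000))  # ('Medium', 4)
```

The smallest-size-first policy is only one possible choice; given the scale-up guidance in the notes, you may prefer to compare it against options with fewer, larger nodes.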

What's new with vRealize Operations 6.6.x sizing:

Monitor larger environments with improved scale: A vRealize Operations 6.6 cluster can contain up to 6 Extra Large nodes, supporting up to 180,000 objects and 45,000,000 metrics.

Monitor more vCenter Servers: A single instance of vRealize Operations can now monitor up to 60 vCenter Servers.

Deploy larger sized nodes: A large Remote Collector can support up to 15,000 objects.

Notes:

- Maximum number of remote collectors (RC) certified: 50.

- Maximum number of VMware vCenter adapter instances certified: 60.

- Maximum number of VMware vCenter adapter instances that were tested on a single collector: 40.

- Maximum number of certified concurrent users: 200.

- This maximum number of concurrent users is achieved on a system configured with objects and metrics at 50% of the supported maximums (for example: 4 large nodes with 20K objects, or 7 medium nodes with 17.5K objects). When the cluster is running with the nodes filled with objects or metrics at maximum levels, the maximum number of concurrent users is 5 users per node (for example: 16 nodes with 150K objects can support 80 concurrent users).

- Maximum number of End Point Operations Management agents certified per cluster: 10,000, on a 4-node cluster of large nodes.

- When High Availability (HA) is enabled, each object is replicated in one of the nodes of the cluster, so the object limit for an HA-enabled environment is half that of a non-HA configuration. vRealize Operations HA supports only one node failure, and you can avoid a single point of failure by placing vRealize Operations cluster nodes on different hosts in the cluster.

- An object in this table represents a basic entity in vRealize Operations Manager that is characterized by properties and metrics collected from adapter data sources. Examples of objects include a virtual machine, a host, or a datastore for a VMware vCenter adapter; a storage switch port for a storage devices adapter; an Exchange server, a Microsoft SQL Server, a Hyper-V server, or a Hyper-V virtual machine for a Hyperic adapter; and an AWS instance for an AWS adapter.

- The limitation of a collector per node: The object or metric limit of a collector is the same as the scaling limit of objects per node. The collector process on a node will support adapter instances where the total number of resources is not more than 2,400, 8,500, and 15,000 respectively, on a small, medium, and large multi-node vRealize Operations Manager cluster. For example, a 4-node system of medium nodes will support a total of 25,000 objects. However, if an adapter instance needs to collect 8,000 objects, a collector that runs on a medium node cannot support that as a medium node can handle only 6,250 objects. In this situation, you can add a large remote collector or use a configuration that uses large nodes instead of small nodes.

- A large node can collect more than 20,000 vRealize Operations for Horizon objects when a dedicated remote collector is used.

- A large node can collect more than 20,000 vRealize Operations for Published Apps objects when a dedicated remote collector is used.

- If the number of objects is close to the high-end limit, increase the memory on the nodes, depending on the monitored environment. Contact Product Support for more details.

- The performance of vRealize Operations Manager can be impacted by the use of snapshots. The presence of a snapshot on the disk causes slow IO performance and high CPU co-stop values, which degrade the performance of vRealize Operations Manager. Keep snapshot use to a minimum in production setups.

- The sizing guides are version specific; use the sizing guide for the vRealize Operations version you are planning to deploy.
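Several of these notes reduce to simple arithmetic. The Python sketch below uses hypothetical helper names to encode three of them: HA halves the usable object limit, a single adapter instance must fit on the one collector that runs it, and at full load you should plan for 5 concurrent users per node. The per-collector limits use the multi-node per-node figures from the table (plus 15,000 for a large remote collector), because the worked example in the notes (8,000 objects exceeding a medium node's 6,250) uses those figures.

```python
# Multi-node per-node object limits from the sizing table, plus the large remote collector.
PER_NODE_OBJECT_LIMIT = {
    "Small": 2_000,
    "Medium": 6_250,
    "Large": 12_500,
    "Extra Large": 30_000,
    "Large Remote Collector": 15_000,
}


def cluster_object_capacity(nodes: int, size: str, ha_enabled: bool = False) -> int:
    """Total objects a cluster of identical nodes can hold."""
    capacity = nodes * PER_NODE_OBJECT_LIMIT[size]
    # With HA every object is replicated, so the usable object limit is halved.
    return capacity // 2 if ha_enabled else capacity


def collector_can_handle(adapter_objects: int, collector: str) -> bool:
    """An adapter instance runs on one collector, so it must fit that collector's limit."""
    return adapter_objects <= PER_NODE_OBJECT_LIMIT[collector]


def users_at_full_load(nodes: int) -> int:
    """With nodes filled to their object/metric maximums, plan for 5 concurrent users per node."""
    return 5 * nodes


if __name__ == "__main__":
    print(cluster_object_capacity(4, "Medium"))                   # 25000
    print(cluster_object_capacity(4, "Medium", ha_enabled=True))  # 12500
    print(collector_can_handle(8_000, "Medium"))                  # False
    print(collector_can_handle(8_000, "Large Remote Collector"))  # True
    print(users_at_full_load(16))                                 # 80
```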

Extra small and small nodes are designed for test environments and POCs. We do not recommend scaling out small nodes beyond two nodes, and we do not recommend scaling out extra small nodes at all. Simply scale up as you scale: increase memory instead of configuring more nodes to monitor larger environments. We recommend scaling up to the possible maximum (default memory x 2) and then scaling out based on underlying hardware that supports the scale requirements. Example: the Large node default memory requirement is 48 GB, and, if needed, it can now be configured up to 96 GB. All nodes must be scaled equally.
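The scale-up rule can be checked mechanically: per-node memory may be raised from the default up to twice the default (matching the Maximum Memory Configuration row), and every node must end up with the same memory. A minimal sketch with a hypothetical `validate_scale_up` helper, covering only the data-node sizes that have a documented maximum:

```python
from typing import List

# Default memory per data node (GB) from the sizing table; the maximum is twice this.
DEFAULT_MEMORY_GB = {"Small": 16, "Medium": 32, "Large": 48}


def validate_scale_up(size: str, node_memory_gb: List[int]) -> List[str]:
    """Return problems with a proposed per-node memory plan; an empty list means it fits."""
    default = DEFAULT_MEMORY_GB[size]
    ceiling = default * 2  # scale up to at most default memory x 2
    problems: List[str] = []
    if len(set(node_memory_gb)) > 1:
        problems.append("all nodes must be scaled equally")
    for index, memory in enumerate(node_memory_gb):
        if not default <= memory <= ceiling:
            problems.append(f"node {index}: {memory} GB is outside {default}-{ceiling} GB")
    return problems


if __name__ == "__main__":
    print(validate_scale_up("Large", [96, 96, 96]))  # []
    print(validate_scale_up("Large", [96, 64]))      # ['all nodes must be scaled equally']
```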

Friday, 19 January 2018

Portable License Unit: Hybrid Cloud Ready Licensing Metric for vRealize Suite

VMware is introducing Portable License Unit (PLU) for VMware vRealize® Suite that provides flexibility to deploy the same vRealize Suite license across hybrid and heterogeneous environments such as VMware vSphere®-based virtualized environment, third-party hypervisors, physical servers, VMware vCloud® Air™, and all other supported public clouds. PLU combines the benefits of managing unlimited Operating System Instances (OSIs) / Virtual Machines (VMs) on one vSphere CPU or up to 15 OSIs on a supported public cloud using the same license key.

For more information, refer to:

https://www.vmware.com/content/dam/digitalmarketing/vmware/en/pdf/products/vrealize/vmware-portable-license-unit.pdf
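Read literally, the paragraph above gives a simple counting rule: one PLU covers one vSphere CPU (with unlimited OSIs on it) or up to 15 OSIs on a supported public cloud. The Python sketch below applies only that rule; the function name is hypothetical, and the linked PDF, not this sketch, defines the actual licensing terms (third-party hypervisors and physical servers are not modeled here).

```python
def plu_count(vsphere_cpus: int, public_cloud_osis: int) -> int:
    """Hypothetical PLU estimate: 1 per vSphere CPU, plus 1 per started block of 15 public-cloud OSIs."""
    if vsphere_cpus < 0 or public_cloud_osis < 0:
        raise ValueError("counts must be non-negative")
    cloud_plus = (public_cloud_osis + 14) // 15  # integer ceiling of OSIs / 15
    return vsphere_cpus + cloud_plus


if __name__ == "__main__":
    # 8 vSphere CPUs plus 40 VMs on a public cloud: 8 + ceil(40 / 15) = 8 + 3
    print(plu_count(8, 40))  # 11
```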

VMware Response to Speculative Execution security issues, CVE-2017-5753, CVE-2017-5715, CVE-2017-5754 (aka Spectre and Meltdown) (52245)

Purpose

The purpose of this article is to describe the issues related to speculative execution in modern-day processors as they apply to VMware and then highlight VMware’s response.

For VMware, the mitigations fall into 3 different categories:

- Hypervisor-Specific Mitigation

- Hypervisor-Assisted Guest Mitigation

- Operating System-Specific Mitigations

This Knowledge Base article will be updated as new information becomes available.

Introduction

On January 3, 2018, it became public that CPU data cache timing can be abused by software to efficiently leak information out of mis-speculated CPU execution, leading to (at worst) arbitrary virtual memory read vulnerabilities across local security boundaries in various contexts. Three variants have been recently discovered by Google Project Zero and other security researchers; these can affect many modern processors, including certain processors by Intel, AMD and ARM:

- Variant 1: bounds check bypass (CVE-2017-5753) – a.k.a. Spectre

- Variant 2: branch target injection (CVE-2017-5715) – a.k.a. Spectre

- Variant 3: rogue data cache load (CVE-2017-5754) – a.k.a. Meltdown

Operating systems (OS), virtual machines, virtual appliances, hypervisors, server firmware, and CPU microcode must all be patched or upgraded for effective mitigation of these known variants. General purpose operating systems are adding several mitigations for them. Most operating system mitigations can be applied to unpatched CPUs (and hypervisors) and will significantly reduce the attack surface. However, some operating system mitigations will be more effective when a new speculative-execution control mechanism is provided by updated CPU microcode (and virtualized to VMs by hypervisors). There can be a performance impact when an operating system applies the above mitigations; consult the specific OS vendor for more details.

Hypervisor-Specific Mitigation

Mitigates leakage from the hypervisor or guest VMs into a malicious guest VM. VMware’s hypervisor products are affected by the known examples of variant 1 and variant 2 vulnerabilities and do require the associated mitigations. Known examples of variant 3 do not affect VMware hypervisor products.

VMware hypervisors do not require the new speculative-execution control mechanism to achieve this class of mitigation and therefore these types of updates can be installed on any currently supported processor. For the latest information on any VMware performance impact, see KB 52337.

Hypervisor-Assisted Guest Mitigation

It virtualizes the new speculative-execution control mechanism for guest VMs so that a Guest OS can mitigate leakage between processes within the VM. This mitigation requires that specific microcode patches that provide the mechanism are already applied to a system’s processor(s) either by ESXi or by a firmware/BIOS update from the system vendor. The ESXi patches for this mitigation will include all available microcode patches at the time of release and the appropriate one will be applied automatically if the system firmware has not already done so.

No significant additional overhead is expected by virtualizing the speculative-execution control mechanism in the hypervisor. There can be a performance impact when an operating system applies this mitigation; consult the specific OS vendor for more details.

Operating System-Specific Mitigations

Mitigations for operating systems (OSes) are provided by your OS vendors. In the case of virtual appliances, your virtual appliance vendor will need to integrate these mitigations into their appliances and provide an updated appliance.
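For Linux guests, one way to see what the guest kernel itself reports is the sysfs directory `/sys/devices/system/cpu/vulnerabilities/`, which exists on kernels that include this reporting interface (mainline 4.15 and later, plus distributions that backported it). The Python sketch below reads its `spectre_v1`, `spectre_v2`, and `meltdown` entries. It is not a VMware tool: it only reflects the guest kernel's own view and cannot confirm that the hypervisor-level patches described in this article are installed. The helper name is hypothetical.

```python
from pathlib import Path
from typing import Dict

VULN_DIR = Path("/sys/devices/system/cpu/vulnerabilities")

# sysfs entries for the three variants discussed in this article.
VARIANT_FILES = {
    "Variant 1 (CVE-2017-5753, Spectre)": "spectre_v1",
    "Variant 2 (CVE-2017-5715, Spectre)": "spectre_v2",
    "Variant 3 (CVE-2017-5754, Meltdown)": "meltdown",
}


def guest_mitigation_status(base: Path = VULN_DIR) -> Dict[str, str]:
    """Read the kernel's self-reported status for each variant.

    A missing or unreadable entry usually means the kernel predates the interface.
    """
    status = {}
    for variant, filename in VARIANT_FILES.items():
        try:
            status[variant] = (base / filename).read_text().strip()
        except OSError:
            status[variant] = "unknown (not reported by this kernel)"
    return status


if __name__ == "__main__":
    for variant, state in guest_mitigation_status().items():
        print(f"{variant}: {state}")
```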

VMware Software-as-a-Service (SaaS) Status Updates

VMware is in the process of investigating and patching its services. The current status is found in the Resolution section.

Resolution

Specific versions of VMware vSphere ESXi (5.5, 6.0, 6.5, VMC), VMware Workstation (12.x, 14.x), and VMware Fusion (8.x, 10.x) have already been updated with hypervisor-specific mitigation as indicated in further detail by VMSA-2018-0002.

Please note that all provided VMware hypervisor-specific mitigations mentioned in this Knowledge Base article can only address known examples of the variant 1 and variant 2 vulnerabilities; known variant 3 examples do not affect VMware hypervisors. VMware will remain vigilant in updating our mitigations as new speculative-execution vulnerabilities are uncovered and as new CPU vendor microcode becomes available.

Hypervisor-Assisted Guest Mitigation

Important: Please review KB52345 for important information on Intel microcode issues that shipped with ESXi patches.

Hypervisor-Assisted Guest Mitigation patches are available. Mitigation requirements including patches have been announced in VMSA-2018-0004. Detailed instructions on enabling Hypervisor-Assisted Guest Mitigation can be found in Hypervisor-Assisted Guest Mitigation for branch target injection (52085).

Operating System-Specific Mitigations

VMware Virtual Appliances

VMware virtual appliance information can be found here: https://kb.vmware.com/s/article/52264

Photon OS

Photon OS has begun releasing fixes which are documented in Photon OS Security Advisories.

PHSA-2018-1.0-0097

PHSA-2018-2.0-0010

PHSA-2018-1.0-0098

PHSA-2018-2.0-0011

VMware products that are installed and run on Windows

VMware products that run on Windows might be affected if Windows has not been patched with appropriate updates. VMware recommends that customers contact Microsoft for resolution.

VMware products that are installed and run on Linux (excluding virtual appliances), Mac OS, iOS or Android

VMware products that run on Linux (excluding virtual appliances), Mac OS, iOS, or Android might be affected if the operating system has not been patched with appropriate updates. VMware recommends that customers contact their operating system vendor for resolution.

VMware Software-as-a-Service (SaaS) Status Updates

Air-Watch

https://support.air-watch.com/articles/115015960907

https://support.air-watch.com/articles/115015960887

VMware Horizon Cloud

http://status.horizon.vmware.com/incidents/nd1ry9frbkvq

VMware Cloud on AWS

https://status.vmware-services.io

VMware Identity Manager SaaS

http://status.vmwareidentity.com

Documentation Timeline

- 01/03/18: VMware Security Advisory Published - VMSA-2018-0002

- 01/05/18: KB52264 Published - VMware Virtual Appliances and CVE-2017-5753, CVE-2017-5715 (Spectre), CVE-2017-5754 (Meltdown)

- 01/08/18: KB52245 Published - VMware Response to Speculative Execution security issues, CVE-2017-5753, CVE-2017-5715, CVE-2017-5754 (aka Spectre and Meltdown)

- 01/09/18: VMware Security Advisory Major Update - VMSA-2018-0002.1

- 01/09/18: VMware Security Advisory Published - VMSA-2018-0004

- 01/09/18: KB52085 Published - Hypervisor-Assisted Guest Mitigation for branch target injection

- 01/10/18: VMware Security Advisory Major Update - VMSA-2018-0004.1

- 01/12/18: KB52337 Published - VMware Performance Impact for CVE-2017-5753, CVE-2017-5715, CVE-2017-5754 (aka Spectre and Meltdown)

- 01/12/18: VMware Security Major Update - VMSA-2018-0004.2

- 01/12/18: KB52345 Published - Intel Sightings in ESXi Bundled Microcode Patches for VMSA-2018-0004

- 01/13/18: VMware Security Advisory Major Update - VMSA-2018-0002.2
