A transport node is a node that participates in NSX-T Data Center overlay or VLAN networking.

If you missed the previous parts of this blog post series, here are the links:

Part - 1

Part - 2

Part - 3

Part - 4

Part - 5

Part - 6

How to Promote ESXi Hosts as Transport Node (Fabric Node)

Prerequisites

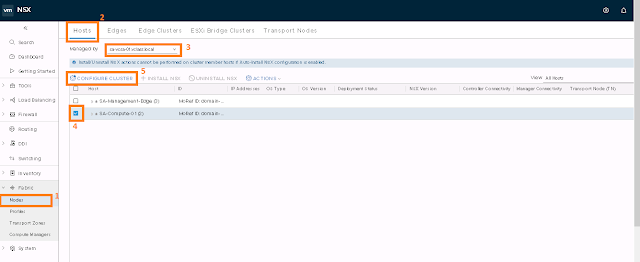

1. Fabric > Nodes > Hosts > Select vCenter Server > Configure Cluster > Select the Cluster which you want to prepare.

2. Configure the required details

3. Verify Manager Connectivity, Controller Connectivity and Deployment Status.

4. As i have enabled auto creation of Transport Node in Step No. 2, Result is these ESXi Server Would be listed as Transport Node under the Transport Nodes tab.

How to Promote KVM Hosts as Transport Node (Fabric Node)

1. First Prepare KVM Nodes with MPA (Management Plane Agent)

Fabric > Nodes > Hosts > Standalone Hosts > Add > Provide the details of KVM Node

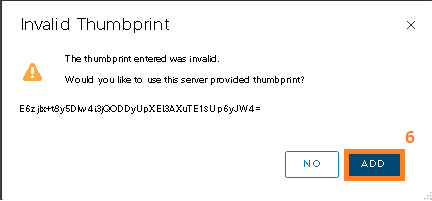

2. Click on Add on Thumbprint Page.

3. Likewise add the Second KVM Node too

4. Now Promote these KVM Nodes as Transport Nodes

Fabric > Nodes > Transport Nodes > Add

If you missed previous parts in this blogpost series. Here is the Links:-

Part - 1

Part - 2

Part - 3

Part - 4

Part - 5

Part - 6

How to Promote ESXi Hosts as Transport Node (Fabric Node)

Prerequisites

- The host must be joined with the management plane, and MPA connectivity must be Up on the Fabric > Hosts page.

- A transport zone must be configured.

- An uplink profile must be configured, or you can use the default uplink profile.

- An IP pool must be configured, or DHCP must be available in the network deployment.

- At least one unused physical NIC must be available on the host node.

1. Fabric > Nodes > Hosts > Select vCenter Server > Configure Cluster > Select the Cluster which you want to prepare.

2. Configure the required details

3. Verify Manager Connectivity, Controller Connectivity and Deployment Status.

4. As i have enabled auto creation of Transport Node in Step No. 2, Result is these ESXi Server Would be listed as Transport Node under the Transport Nodes tab.

How to Promote KVM Hosts as Transport Node (Fabric Node)

1. First Prepare KVM Nodes with MPA (Management Plane Agent)

Fabric > Nodes > Hosts > Standalone Hosts > Add > Provide the details of KVM Node

2. Click on Add on Thumbprint Page.

3. Likewise add the Second KVM Node too

4. Now Promote these KVM Nodes as Transport Nodes

Fabric > Nodes > Transport Nodes > Add

As a result of adding an ESXi host to the NSX-T fabric, the following VIBs get installed on the host.

- nsx-aggservice—Provides host-side libraries for NSX-T aggregation service. NSX-T aggregation service is a service that runs in the management-plane nodes and fetches runtime state from NSX-T components.

- nsx-da—Collects discovery agent (DA) data about the hypervisor OS version, virtual machines, and network interfaces. Provides the data to the management plane, to be used in troubleshooting tools.

- nsx-esx-datapath—Provides NSX-T data plane packet processing functionality.

- nsx-exporter—Provides host agents that report runtime state to the aggregation service running in the management plane.

- nsx-host— Provides metadata for the VIB bundle that is installed on the host.

- nsx-lldp—Provides support for the Link Layer Discovery Protocol (LLDP), which is a link layer protocol used by network devices for advertising their identity, capabilities, and neighbors on a LAN.

- nsx-mpa—Provides communication between NSX Manager and hypervisor hosts.

- nsx-netcpa—Provides communication between the central control plane and hypervisors. Receives logical networking state from the central control plane and programs this state in the data plane.

- nsx-python-protobuf—Provides Python bindings for protocol buffers.

- nsx-sfhc—Service fabric host component (SFHC). Provides a host agent for managing the lifecycle of the hypervisor as a fabric host in the management plane's inventory. This provides a channel for operations such as NSX-T upgrade and uninstall and monitoring of NSX-T modules on hypervisors.

- nsxa—Performs host-level configurations, such as N-VDS creation and uplink configuration.

- nsxcli—Provides the NSX-T CLI on hypervisor hosts.

- nsx-support-bundle-client - Provides the ability to collect support bundles.

To verify, you can run the esxcli software vib list | grep nsx or esxcli software vib list | grep <yyyy-mm-dd> command on the ESXi host, where the date is the day that you performed the installation.

5. On the General tab, select the overlay transport zone.

6. Provide the N-VDS details and the other required details, then click Add.

7. Promote the second KVM node the same way.
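The same settings that steps 4 to 6 collect in the UI can also be expressed as a request body for the NSX-T REST API. The sketch below builds such a body as a Python dict. As far as I know, NSX-T 2.x accepts this as `POST /api/v1/transport-nodes`, but the field names are my recollection of that API, not something taken from this post, so check them against the API guide for your release. The function name, the default N-VDS name `nvds-overlay`, the NIC `eth1`, the uplink name `uplink-1`, and all IDs are hypothetical placeholders.

```python
import json


def build_transport_node_payload(node_id, display_name, transport_zone_id,
                                 uplink_profile_id, ip_pool_id,
                                 host_switch_name="nvds-overlay",
                                 pnic="eth1", uplink_name="uplink-1"):
    """Return a transport-node request body as a dict.

    Field names are assumed from the NSX-T 2.x transport-node API;
    verify them against the API guide for your release.
    """
    return {
        # ID of the KVM fabric node added under Standalone Hosts (steps 1-3).
        "node_id": node_id,
        "display_name": display_name,
        "host_switch_spec": {
            "resource_type": "StandardHostSwitchSpec",
            "host_switches": [{
                # Assumed to match the N-VDS name of the overlay transport zone.
                "host_switch_name": host_switch_name,
                "host_switch_profile_ids": [
                    {"key": "UplinkHostSwitchProfile", "value": uplink_profile_id},
                ],
                # An unused physical NIC mapped to an uplink of the uplink profile.
                "pnics": [{"device_name": pnic, "uplink_name": uplink_name}],
                # Tunnel endpoint (TEP) addresses come from an IP pool here.
                "ip_assignment_spec": {
                    "resource_type": "StaticIpPoolSpec",
                    "ip_pool_id": ip_pool_id,
                },
            }],
        },
        # Step 5: the overlay transport zone selected on the General tab.
        "transport_zone_endpoints": [{"transport_zone_id": transport_zone_id}],
    }


if __name__ == "__main__":
    # All IDs below are hypothetical placeholders.
    body = build_transport_node_payload(
        node_id="<kvm-node-id>", display_name="kvm-01",
        transport_zone_id="<overlay-tz-id>",
        uplink_profile_id="<uplink-profile-id>", ip_pool_id="<tep-pool-id>",
    )
    print(json.dumps(body, indent=2))
```

Building the body separately from sending it makes it easy to inspect the JSON before creating a transport node for the second KVM host with only `node_id` and `display_name` changed.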

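Use the `dpkg -l | grep nsx` command to verify that the NSX packages, including the kernel modules, are installed on the KVM host (dpkg applies to Ubuntu-based KVM hosts).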
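Both verification commands in this post, `esxcli software vib list | grep nsx` on ESXi and `dpkg -l | grep nsx` on KVM, print one package per line, so the check can be scripted. The sketch below is a hypothetical helper, not an NSX tool: it extracts package names from either output and reports which of the ESXi VIBs listed in this post are missing. The post does not list the KVM package names, so for KVM it only extracts names. It assumes the package name is the first column of the esxcli output and the second column of `dpkg -l` rows whose status is `ii`.

```python
# ESXi VIBs that this post lists as installed on an NSX-T fabric node.
EXPECTED_ESXI_VIBS = {
    "nsx-aggservice", "nsx-da", "nsx-esx-datapath", "nsx-exporter",
    "nsx-host", "nsx-lldp", "nsx-mpa", "nsx-netcpa", "nsx-python-protobuf",
    "nsx-sfhc", "nsxa", "nsxcli", "nsx-support-bundle-client",
}


def installed_from_esxcli(output):
    """Return VIB names from `esxcli software vib list` output (first column)."""
    names = set()
    for line in output.splitlines():
        fields = line.split()
        # Skip blank lines, the "Name ..." header, and the dashed separator row.
        if not fields or fields[0] == "Name" or fields[0].startswith("-"):
            continue
        names.add(fields[0])
    return names


def installed_from_dpkg(output):
    """Return package names from `dpkg -l` output for installed ('ii') rows."""
    names = set()
    for line in output.splitlines():
        fields = line.split()
        # Header lines never start with 'ii'; removed packages show 'rc'.
        if len(fields) >= 2 and fields[0] == "ii":
            # Drop an architecture suffix such as ':amd64' if present.
            names.add(fields[1].split(":")[0])
    return names


def missing(expected, installed):
    """Return the expected package names that are not installed, sorted."""
    return sorted(expected - installed)
```

For example, save the esxcli output from a host to a file, pass its contents to `installed_from_esxcli`, and an empty result from `missing(EXPECTED_ESXI_VIBS, ...)` means every VIB in the list above is present.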
