This is one of the very good posts available on blogs.vmware.com. I hope it helps all of us understand the performance impact of cores per socket.

There is a lot of outdated information regarding the use of a vSphere feature that changes how a virtual machine's logical processors are presented, arranging them into a specific socket and core configuration. This advanced setting is commonly known as corespersocket.

It was originally intended to address licensing issues where some operating systems had limitations on the number of sockets that could be used, but did not limit core count.

KB Reference: http://kb.vmware.com/kb/1010184

It’s often been said that this change of processor presentation does not affect performance, but it may impact performance by influencing the sizing and presentation of virtual NUMA to the guest operating system.

Reference: Performance Best Practices for VMware vSphere 5.5 (page 44): http://www.vmware.com/pdf/Perf_Best_Practices_vSphere5.5.pdf

Recommended Practices

#1 When creating a virtual machine, by default, vSphere will create as many virtual sockets as you’ve requested vCPUs and the cores per socket is equal to one. I think of this configuration as “wide” and “flat.” This will enable vNUMA to select and present the best virtual NUMA topology to the guest operating system, which will be optimal on the underlying physical topology.

#2 When you must change the cores per socket, commonly due to licensing constraints, ensure you mirror the physical server’s NUMA topology. This is because when a virtual machine is no longer configured by default as “wide” and “flat,” vNUMA will not automatically pick the best NUMA configuration based on the physical server. It will instead honor your configuration, right or wrong, potentially leading to a topology mismatch that does affect performance.

To demonstrate this, the following experiment was performed. Special thanks to Seongbeom for this test and the results.

Test Bed

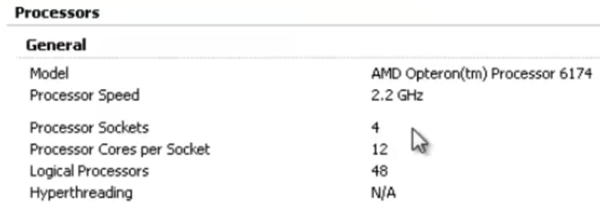

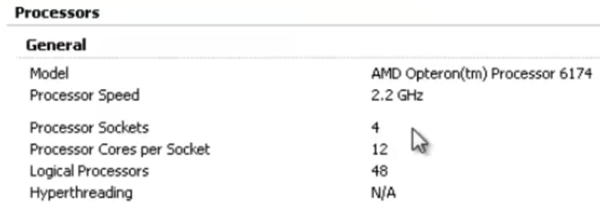

Dell R815 AMD Opteron 6174 based server with 4x physical sockets by 12x cores per processor = 48x logical processors.

The AMD Opteron 6174 (aka Magny-Cours) processor is essentially two 6 core Istanbul processors assembled into a single socket. This architecture means that each physical socket is actually two NUMA nodes. So this server actually has 8x NUMA nodes and not four, as some may incorrectly assume.
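The node count above is simple arithmetic, and it can be sketched as a small model. This is only an illustration: the `HostTopology` class and its field names are made up for this example, and the numbers are the ones quoted for the test server.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class HostTopology:
    """Hypothetical model of a host's physical NUMA layout."""
    sockets: int           # physical sockets in the server
    nodes_per_socket: int  # NUMA nodes inside each socket (2 on Magny-Cours)
    cores_per_node: int    # cores in each NUMA node (one 6-core die)

    @property
    def numa_nodes(self) -> int:
        return self.sockets * self.nodes_per_socket

    @property
    def cores_per_socket(self) -> int:
        return self.nodes_per_socket * self.cores_per_node

    @property
    def total_cores(self) -> int:
        return self.numa_nodes * self.cores_per_node


# Values taken from the test bed description: 4 sockets, 2 dies of 6 cores each.
r815 = HostTopology(sockets=4, nodes_per_socket=2, cores_per_node=6)
print(r815.numa_nodes, r815.cores_per_socket, r815.total_cores)  # 8 12 48
```

The point of separating `nodes_per_socket` from `sockets` is that the socket count alone (four here) understates the number of NUMA nodes on this processor family.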

Within esxtop, we can validate the total number of physical NUMA nodes that vSphere detects.

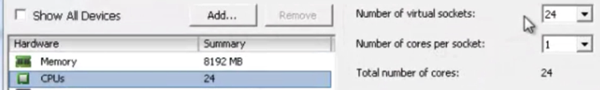

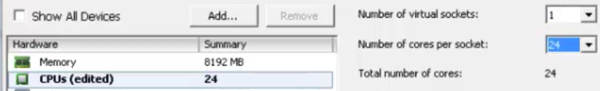

Test VM Configuration #1 – 24 sockets by 1 core per socket (“Wide” and “Flat”)

Since this virtual machine requires 24 logical processors, vNUMA automatically creates the smallest topology that supports this requirement: 24 cores, which means 2 physical sockets and therefore a total of 4 physical NUMA nodes.
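The sizing in this paragraph can be reproduced with a ceiling division. The sketch below is not how vNUMA is implemented; it only restates the arithmetic, and the helper name `min_numa_nodes` is hypothetical. It assumes 6 cores per physical NUMA node and 2 NUMA nodes per socket, as on the test server.

```python
import math


def min_numa_nodes(vcpus: int, cores_per_node: int) -> int:
    """Smallest number of NUMA nodes whose cores can hold `vcpus`."""
    if vcpus <= 0 or cores_per_node <= 0:
        raise ValueError("vcpus and cores_per_node must be positive")
    return math.ceil(vcpus / cores_per_node)


nodes = min_numa_nodes(vcpus=24, cores_per_node=6)
sockets = math.ceil(nodes / 2)  # 2 NUMA nodes per physical socket on this host
print(nodes, sockets)  # 4 2
```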

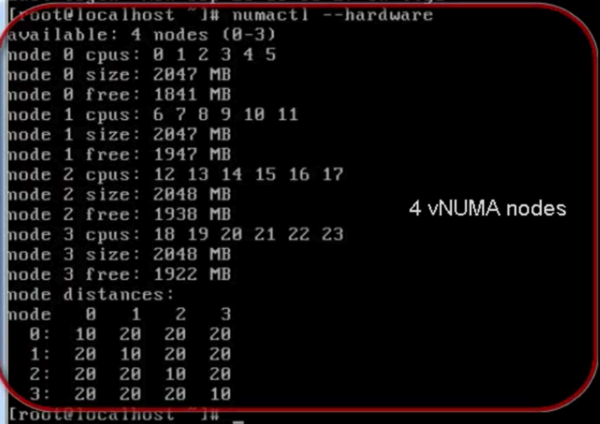

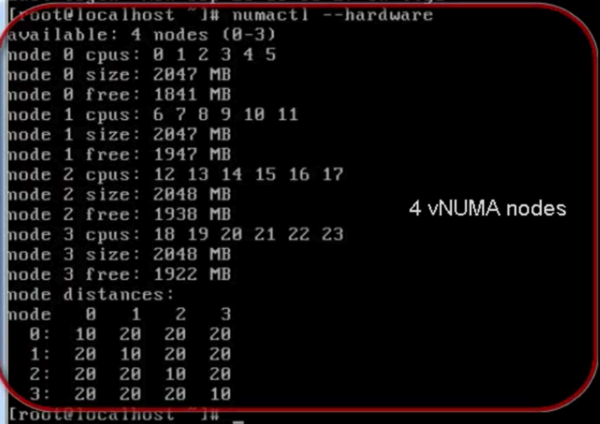

Within the Linux based virtual machine used for our testing, we can validate what vNUMA presented to the guest operating system by using: numactl --hardware
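If you want to check the node count in a script rather than by eye, the "node N cpus:" lines of that command's output can be parsed. The following is a minimal sketch: `parse_numactl_hardware` is a hypothetical helper, and `SAMPLE` is an invented two-node example used only to exercise the parser, not output captured from the test virtual machine.

```python
import re
from typing import Dict, List


def parse_numactl_hardware(text: str) -> Dict[int, List[int]]:
    """Map each NUMA node id to the CPU ids on its 'node N cpus:' line."""
    nodes: Dict[int, List[int]] = {}
    for line in text.splitlines():
        match = re.match(r"\s*node\s+(\d+)\s+cpus:\s*(.*)$", line)
        if match:
            cpu_ids = [int(cpu) for cpu in match.group(2).split()]
            nodes[int(match.group(1))] = cpu_ids
    return nodes


# Invented sample text for illustration only (not from the test VM).
SAMPLE = """\
available: 2 nodes (0-1)
node 0 cpus: 0 1 2 3
node 0 size: 4096 MB
node 1 cpus: 4 5 6 7
node 1 size: 4096 MB
"""

topology = parse_numactl_hardware(SAMPLE)
print(len(topology), topology)
```

Inside a real guest, the text would come from running the command itself, for example through Python's `subprocess` module, instead of from a hard-coded string.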

Next, we ran an in-house micro-benchmark, which exercises processors and memory. For this configuration we see a total execution time of 45 seconds.

Next let’s alter the virtual sockets and cores per socket of this virtual machine to generate another result for comparison.

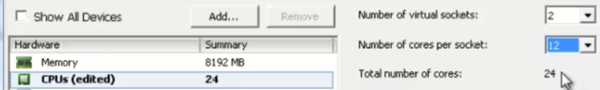

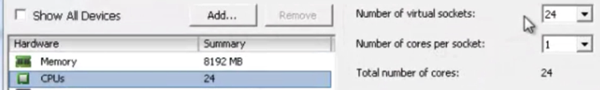

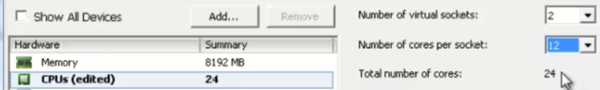

Test VM Configuration #2 – 2 sockets by 12 cores per socket

In this configuration, while the virtual machine is still configured to have a total of 24 logical processors, we manually intervened and configured 2 virtual sockets by 12 cores per socket. vNUMA will no longer automatically create the topology it thinks is best, but instead will respect this specific configuration and present only two virtual NUMA nodes as defined by our virtual socket count.
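To see why this layout is a mismatch, it helps to compare the size of each virtual NUMA node with a physical one. The sketch below assumes, as described above, that a manually set cores per socket value makes each virtual socket one virtual NUMA node. The names `VirtualTopology` and `manual_topology` are hypothetical, and this is a back-of-the-envelope check, not a model of the ESXi scheduler.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class VirtualTopology:
    virtual_sockets: int         # also the virtual NUMA node count (assumption)
    vcpus_per_virtual_node: int
    physical_nodes_spanned: int  # physical NUMA nodes needed per virtual node

    @property
    def fits_in_one_physical_node(self) -> bool:
        return self.physical_nodes_spanned == 1


def manual_topology(vcpus: int, cores_per_socket: int,
                    physical_cores_per_node: int) -> VirtualTopology:
    """Describe a manually configured sockets x cores-per-socket layout."""
    if vcpus % cores_per_socket != 0:
        raise ValueError("vCPU count must be a multiple of cores per socket")
    return VirtualTopology(
        virtual_sockets=vcpus // cores_per_socket,
        vcpus_per_virtual_node=cores_per_socket,
        physical_nodes_spanned=math.ceil(cores_per_socket / physical_cores_per_node),
    )


# 24 vCPUs as 2 sockets x 12 cores, on a host with 6 cores per NUMA node.
config = manual_topology(vcpus=24, cores_per_socket=12, physical_cores_per_node=6)
print(config.virtual_sockets, config.physical_nodes_spanned,
      config.fits_in_one_physical_node)  # 2 2 False
```

Each 12 vCPU virtual node is twice the size of a 6 core physical node, so the guest sees two nodes where the hardware underneath has four.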

Within the Linux based virtual machine, we can validate what vNUMA presented to the guest operating system by using: numactl --hardware

Re-running the exact same micro-benchmark we get an execution time of 54 seconds.

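This configuration, which resulted in a non-optimal virtual NUMA topology, took 9 seconds longer than the “wide” and “flat” run: a 20% increase in execution time. Put the other way around, the default configuration finished in about 17% less time.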

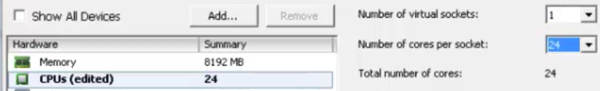

Test VM Configuration #3 – 1 socket by 24 cores per socket

In this configuration, while the virtual machine is again configured to have a total of 24 logical processors, we manually intervened and configured 1 virtual socket by 24 cores per socket. Again, vNUMA will no longer automatically create the topology it thinks is best, but instead will respect this specific configuration and present only one NUMA node as defined by our virtual socket count.

Within the Linux based virtual machine, we can validate what vNUMA presented to the guest operating system by using: numactl --hardware

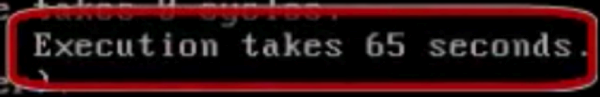

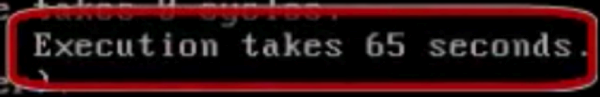

Re-running the micro-benchmark one more time we get an execution time of 65 seconds.

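This configuration, with yet a different non-optimal virtual NUMA topology, took 20 seconds longer than the “wide” and “flat” run: a 44% increase in execution time. Put the other way around, the default configuration finished in about 31% less time.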
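The relative differences between the three runs can be recomputed from the reported times (45, 54, and 65 seconds). The sketch below shows both ways of expressing the gap, since the result depends on which run is used as the denominator. The function names are made up for this example.

```python
def percent_longer(baseline_s: float, measured_s: float) -> float:
    """Extra time of the measured run, relative to the baseline run."""
    return (measured_s - baseline_s) / baseline_s * 100


def percent_less_time(baseline_s: float, measured_s: float) -> float:
    """Time saved by the baseline run, relative to the measured run."""
    return (measured_s - baseline_s) / measured_s * 100


BASELINE_S = 45  # 24 sockets x 1 core ("wide" and "flat")
RUNS = {"2 sockets x 12 cores": 54, "1 socket x 24 cores": 65}

for label, seconds in RUNS.items():
    print(f"{label}: {percent_longer(BASELINE_S, seconds):.1f}% longer, "
          f"baseline used {percent_less_time(BASELINE_S, seconds):.1f}% less time")
```

Relative to the 45 second baseline the two manual configurations ran roughly 20% and 44% longer. Relative to their own run times, the baseline saved roughly 17% and 31%.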

To summarize, this test demonstrates that changing the corespersocket configuration of a virtual machine does indeed affect performance when the manually configured virtual NUMA topology does not match the physical NUMA topology.

The Takeaway

Always spend a few minutes to understand your physical server’s NUMA topology and leverage that when rightsizing your virtual machines.

Thanks to Mark Achtemichuk
